Local AI Lab Setup

Pick your platform and get Ollama running before you dive into streaming and performance metrics.

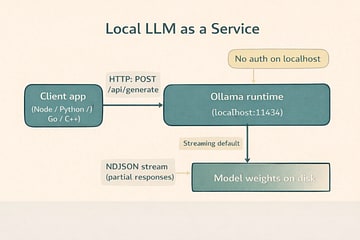

Credit: MethodicalFunction.com.

- Local AI Lab Setup: macOS (Ollama)

- Local AI Lab Setup: Linux (Ollama)

- Local AI Lab Setup: Windows (WSL2 + Ollama)

- Local AI Lab Setup: Docker (Ollama)
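
Whichever guide you follow, it can help to confirm that the Ollama server is actually answering before you move on. The snippet below is a minimal sketch, not part of the platform guides: it assumes Ollama is listening on its default local address, `http://localhost:11434`, and the helper name `ollama_is_running` is purely illustrative. If your install uses a different host or port (for example, a remapped Docker port), pass that URL instead.

```python
import urllib.error
import urllib.request

# Assumption: Ollama's default local address. Change this if your setup
# binds to another host or port.
DEFAULT_URL = "http://localhost:11434"


def ollama_is_running(base_url: str = DEFAULT_URL, timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers at base_url with status 200.

    Hypothetical helper for illustration; it only checks reachability and
    does not verify that any model has been pulled.
    """
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, DNS failure, or a malformed URL.
        return False


if __name__ == "__main__":
    print("Ollama reachable:", ollama_is_running())
```

A `False` result usually means the server has not been started yet or is bound to a different address than the one being checked.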

When you're done, return to the main walkthrough: