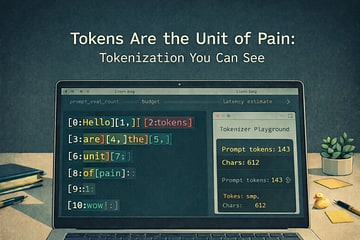

Tokens Are the Unit of Pain: Tokenization You Can See

Tokens are the meter your model charges in: they determine how much fits in the context window, how long a response takes, and what it costs. We'll make tokenization visible using your existing local Ollama service, first with a CLI token inspector and heatmap, then with a live-updating browser playground.